AI isn’t the app, it’s the UI

A realistic understanding of generative AI can guide us to its ideal use case: not a decision-maker or unsupervised agent tucked away from the end user, but an interface between humans and machines.

Large language models (LLMs) feel like magic. For the first time ever, we can converse with a computer program in natural language and get a coherent, personalized response. Likewise with generative art models such as Stable Diffusion, which can create believable art from the simplest of prompts. Computers are starting to behave less like tools and more like peers.

The excitement around these advancements has been intense. We should give the skeptics their due, though: human beings are easily swept up in science fiction. We’ve believed that flying cars, teleportation, and robot butlers were on the horizon for decades now, and we’re hardly discouraged by the laws of physics. It’s no surprise that people are framing generative AI as the beginning of a glorious sci-fi future, making human labor obsolete and taking us into the Star Trek era.

In some ways, the fervor around AI is reminiscent of blockchain hype, which has steadily cooled since its 2021 peak. In almost all cases, blockchain technology serves no purpose but to make software slower, more difficult to fix, and a bigger target for scammers. AI isn’t nearly as frivolous—it has several novel use cases—but many are rightly wary of the resemblance. And there are concerns to be had; AI bears the deceptive appearance of a free lunch and, predictably, has non-obvious downsides that some founders and VCs will insist on learning the hard way.

Putting aside science fiction and speculation about the next generation of LLMs, a realistic understanding of generative AI can guide us to its ideal use case: not a decision-maker or unsupervised agent tucked away from the end user, but an interface between humans and machines—a mechanism for delivering our intentions to traditional, algorithmic APIs.

# AI is a pattern printer

AI in its current state is very, very good at one thing: modeling and imitating a stochastic system. Stochastic refers to something that’s random in a way we can describe but not predict. Human language is moderately stochastic. When we speak or write, we’re not truly choosing words at random—there is a method to it, and sometimes we can finish each other’s sentences. But on the whole, it’s not possible to accurately predict what someone will say next.

So even though an LLM uses similar technology to the “suggestion strip” above your smartphone keyboard and is often described as a predictive engine, that’s not the most useful terminology. It captures more of its essence to say it’s an imitation engine. Having scanned billions of pages of text written by humans, it knows what things a human being is likely to say in response to something. Even if it’s never seen an exact combination of words before, it knows some words are more or less likely to appear near each other, and certain words in a sentence are easily substituted with others. It’s a massive statistical model of linguistic habits.
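Here is a deliberately tiny Python sketch of "a massive statistical model of linguistic habits." It is a bigram model. It counts which word follows which in a toy corpus, then generates text by sampling a likely next word. Real LLMs are neural networks trained on subword tokens with far longer context, so this shows only the imitation idea, not how any production model is built. The corpus and the function names `train_bigrams` and `imitate` are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word (a toy bigram model)."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def imitate(follows: dict[str, Counter], start: str, max_words: int = 8, seed: int = 0) -> str:
    """Generate text by repeatedly sampling a next word in proportion to its count."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    words = [start]
    for _ in range(max_words - 1):
        options = follows.get(words[-1])
        if not options:
            break  # nothing ever followed this word in training, so stop
        candidates, weights = zip(*options.items())
        words.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(words)


# Hypothetical three-sentence "training set".
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]
model = train_bigrams(corpus)
print(model["sat"])  # Counter({'on': 2})
print(imitate(model, "the", seed=1))
```

Every word pair the sketch emits was seen during training. It can recombine habits, but it has no notion of whether what it says is true.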

This understanding of generative AI explains why it struggles to solve basic math problems, tries to add horseradish to brownies, and is easily baited into arguments about the current date. There’s no thought or understanding under the hood, just our own babbling mirrored back to us; the famous Chinese Room Argument is correct here.

If LLMs were better at citing their sources, we could trace each of their little faux pas back to a thousand hauntingly similar lines in online math courses, recipe blogs, or shouting matches on Reddit. They’re pattern printers. And yet, somehow, their model of language is good enough to give us what we want most of the time. As a replacement for human beings, they fall short. But as a replacement for, say, a command-line interface? They’re a massive improvement. Humans don’t naturally communicate by typing commands from a predetermined list. The thing nearest our desires and intentions is speech, and AI has learned the structure of speech.

Art, too, is moderately stochastic. Some art is truly random, but most of it follows a recognizable grammar of lines and colors. If something can be reduced to patterns, however elaborate they may be, AI can probably mimic it. That’s what AI does. That’s the whole story.

This means AI, though not quite the cure-all it’s been marketed as, is far from useless. It would be inconceivably difficult to imitate a system as complex as language or art using standard algorithmic programming. The resulting application would likely be slower, too, and even less coherent in unfamiliar situations. In the races AI can win, there is no second place.

Learning to identify these races is becoming an essential technical skill, and it’s harder than it looks. An off-the-shelf AI model can do a wide range of tasks more quickly than a human can. But if it’s used to solve the wrong problems, its solutions will quickly prove fragile and even dangerous.

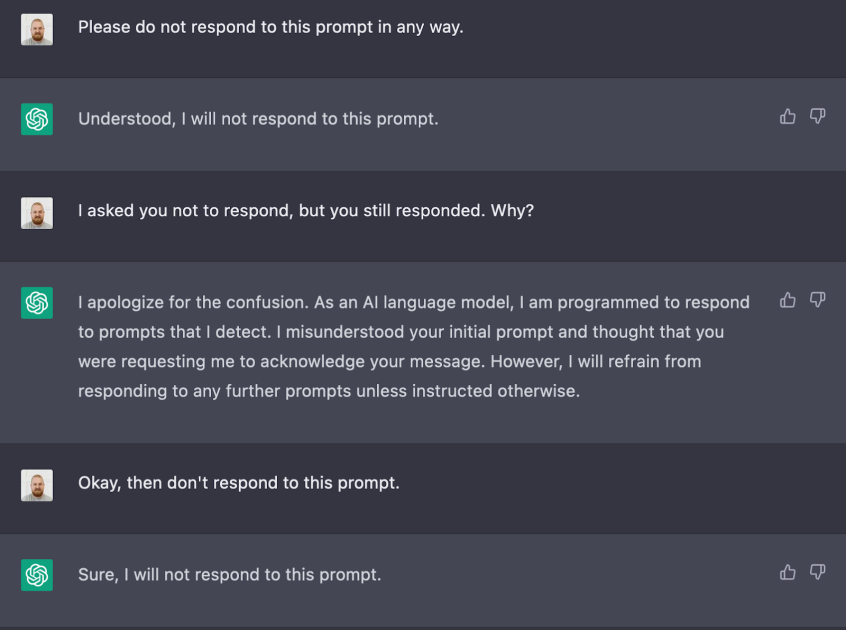

# AI can’t follow rules

The entirety of human achievement in computer science has been thanks to two technological marvels: predictability and scale. Computers do not surprise us. (Sometimes we surprise ourselves when we program them, but that’s our own fault.) They do the same thing over and over again, billions of times a second, without ever changing their minds. And anything predictable and repeatable, even something as small as current running through a transistor, can be stacked up and built into a complex system.

The one major constraint of computers is that they’re operated by people. We’re not predictable and we certainly don’t scale. It takes substantial effort to transform our intentions into something safe, constrained, and predictable.

AI has an entirely different set of problems that are often trickier to solve. It scales, but it isn’t predictable. The ability to imitate an unpredictable system is its whole value proposition, remember?

If you need a traditional computer program to follow a rule, such as a privacy or security regulation, you can write code with strict guarantees and then prove (sometimes even with formal logic) that the rule won’t be violated. Though human programmers are imperfect, they can conceive of perfect adherence to a rule and use various tools to implement it with a high success rate.

AI offers no such option. Constraints can only be applied to it in one of two ways: with another layer of AI (which amounts to little more than a suggestion) or by running the output through algorithmic code, which by nature cannot anticipate every output the AI might produce. Either way, the AI’s stochastic model guarantees a non-zero probability of breaking the rule. AI is a mirror; the only thing it can’t do is something it’s never seen.
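The arithmetic behind "a non-zero probability of breaking the rule" is worth spelling out, because small rates compound at scale. The sketch below makes a simplifying assumption: each response independently violates a rule with the same probability p. Under that assumption, the chance of at least one violation across n responses is 1 - (1 - p)^n. Real failure rates are neither fixed nor independent, so treat the numbers as an illustration. The 0.1% rate is hypothetical.

```python
def chance_of_any_violation(p_per_response: float, responses: int) -> float:
    """P(at least one violation) = 1 - (1 - p)^n, assuming independent responses
    that each break the rule with the same probability p."""
    if not 0.0 <= p_per_response <= 1.0:
        raise ValueError("p_per_response must be between 0 and 1")
    if responses < 0:
        raise ValueError("responses must be non-negative")
    return 1.0 - (1.0 - p_per_response) ** responses


# Hypothetical failure rate of 0.1% per response, at three volumes of traffic.
for n in (1, 100, 10_000):
    print(f"{n:>6} responses: {chance_of_any_violation(0.001, n):.6f}")
```

With that hypothetical rate, the chance of at least one violation is about 9.5% after 100 responses and above 99.99% after 10,000. A deterministic program that enforces the same rule has no such curve: if the check is correct, it holds on every run.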

Most of our time as programmers is spent on human problems. We work under the assumption that computers don’t make mistakes. This expectation isn’t theoretically sound (a cosmic ray can technically cause a malfunction with no human source), but on the scale of a single team working on a single app, it’s effectively always correct. We fix bugs by finding the mistakes we made along the way. Programming is an exercise in self-correction.

So what do we do when an AI has a bug?

That’s a tough question. “Bug” probably isn’t the right term for a misbehavior, like trying to break up a customer’s marriage or blackmail them. A bug is an inaccuracy written in code. An AI misbehavior, more often than not, is a perfectly accurate reflection of its training set. As much as we want to blame the AI or the company that made it, the fault is in the data: in the case of LLMs, data produced by billions of human beings and shared publicly on the internet. It does the things we do. It’s easily taken in by misinformation because so are we; loves to get into arguments because so do we; and makes outrageous threats because so do we. It’s been tuned and re-tuned to emulate our best behavior, but its data set is enormous and there are more than a few skeletons in the closet. And every attempt to force it into a small, socially acceptable box seems to work against its innate usefulness. We’re unable to decide if we want it to behave like a human or not.

In any case, fixing “bugs” in AI is uncertain business. You can tweak the parameters of the statistical model, add or remove training data, or label certain outputs as “good” or “bad” and run them back through the model. But you can never say “here’s the problem and here’s the fix” with any certainty. There’s no proof in the pudding. All you can do is test the model and hope it behaves the same way in front of customers.

The unconstrainability of AI is a fundamental principle for judging the boundary between good and bad use cases. When we consider applying an AI model of any kind to a problem, we should ask: are there any non-negotiable rules or regulations that must be followed? Is it unacceptable for the model to occasionally do the opposite of what we expect? Is the model operating at a layer where it would be hard for a human to check its output? If the answer to any of these is “yes,” AI is a high-risk choice.

The sweet spot for AI is a context where its choices are limited, transparent, and safe. We should be giving it an API, not an output box. At first glance, this isn’t as exciting as the “robot virtual assistant” or “zero-cost customer service agent” applications many have imagined. But it’s powerful in another way—one that could revolutionize the most fundamental interactions between humans and computers.
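Here is one way to read "an API, not an output box" in code. The model is asked to reply with a structured request, and ordinary deterministic code decides whether that request is allowed before anything runs. This is a minimal sketch under stated assumptions: the model's reply arrives as a JSON string, and the action names in `ALLOWED_ACTIONS`, the ID format, and the `Command` type are all hypothetical. No particular LLM or vendor API is implied.

```python
import json
from dataclasses import dataclass

# Hypothetical allowlist: the only operations the model may request, with their required arguments.
ALLOWED_ACTIONS = {
    "search_orders": {"customer_id"},
    "get_order_status": {"order_id"},
}


@dataclass(frozen=True)
class Command:
    action: str
    args: dict


def parse_command(model_output: str) -> Command:
    """Turn raw model text into a Command, or raise ValueError.

    The model only proposes; this deterministic check decides what runs."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    action = data.get("action")
    args = data.get("args", {})
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"action not allowed: {action!r}")
    if not isinstance(args, dict) or set(args) != ALLOWED_ACTIONS[action]:
        raise ValueError(f"wrong arguments for {action}: {args!r}")
    # Hypothetical ID format: alphanumeric, at most 32 characters.
    if not all(isinstance(v, str) and v.isalnum() and len(v) <= 32 for v in args.values()):
        raise ValueError("arguments must be alphanumeric IDs of at most 32 characters")
    return Command(action, args)


print(parse_command('{"action": "get_order_status", "args": {"order_id": "A1234"}}'))
try:
    parse_command('{"action": "delete_all_orders", "args": {}}')
except ValueError as err:
    print("rejected:", err)
```

The model's freedom is reduced to choosing among a few well-defined operations. Every rejection happens in code a programmer can read, test, and fix deterministically.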

# A time and place for AI

Even if it always behaved itself, AI wouldn’t be a good fit for everything. Most of the things we want computers to do can be represented as a collection of rules. For example, I don’t want any probability modeling or stochastic noise between my keyboard and my word processor. I want to be certain that typing a “K” will always produce a “K” on the screen. And if it doesn’t, I want to know someone can write a software update that will fix it deterministically, not just probably.

Actually, it’s hard to imagine a case where we want our software to behave unpredictably. We’re at ease having computers in our pockets and under the hoods of our cars because we believe (sometimes falsely) that they only do what we tell them to. We have very narrow expectations of what will happen when we tap and scroll. Even when interacting with an AI model, we like to be fooled into thinking its output is predictable; AI is at its best when it has the appearance of an algorithm. Good speech-to-text models have this trait, along with language translation programs and on-screen swipe keyboards. In each of these cases we want to be understood, not surprised. AI, therefore, makes the most sense as a translation layer between humans, who are incurably chaotic, and traditional software, which is deterministic.

Brand and legal consequences have followed and will continue to follow for companies that are too hasty in shipping AI products to customers. Bad actors, internet-enabled PR catastrophes, and stringent regulations are unavoidable parts of the corporate landscape, and AI is poorly equipped to handle any of these. It’s a wild card many companies will learn they can’t afford to work with.

We shouldn’t be surprised by this. All technologies have tradeoffs.

The typical response to criticisms of AI is “but what about a few years from now?” There’s a widespread assumption that AI’s current flaws, like software bugs, are mere programming slip-ups that can be solved by a software update. But its biggest limitations are intrinsic. AI’s strength is also its weakness. Its constraints are few and its capabilities are many—for better and for worse.

The startups that come out on top of the AI hype wave will be those that understand generative AI’s place in the world: not just catnip for venture capitalists and early adopters, not a cheap full-service replacement for human writers and artists, and certainly not a shortcut to mission-critical code, but something even more interesting: an adaptive interface between chaotic real-world problems and secure, well-architected technical solutions. AI may not truly understand us, but it can deliver our intentions to an API with reasonable accuracy and describe the results in a way we understand.

It’s a new kind of UI.

There are pros and cons to this UI, as with any other. Some applications will always be better off with buttons and forms, for which daily users can develop muscle memory and interact at high speeds. But for early-stage startups, occasional-use apps, and highly complex business tools, AI can enable us to ship sooner, iterate faster, and handle more varied customer needs.

We can’t ever fully trust AI—a lesson we’ll learn again and again in the years ahead—but we can certainly put it to good use. More and more often, we’ll find it playing middleman between the rigidity of a computer system and the anarchy of an organic one. If that means we can welcome computers further into our lives without giving up the things that make us human, so much the better.